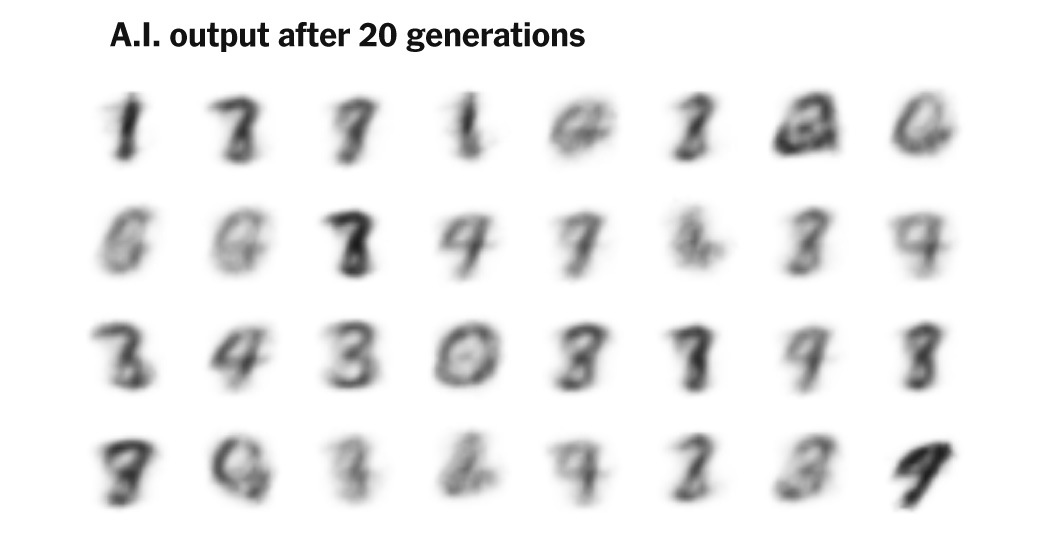

Inbreeding

What are you doing step-AI?

Are you serious? Right in front of my local SLM?

My takeaway from this is:

- Get a bunch of AI-generated slop and put it in a bunch of individual `.htm` files on my webserver.

- When my bot user agent filter is invoked in Nginx, instead of returning `444` and closing the connection, return a random `.htm` of AI-generated slop (instead of serving the real content)

- Laugh as the LLMs eat their own shit

- ???

- Profit
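The selection step in that plan could look roughly like this in Python. It is only a sketch of the decision logic, not an Nginx config: `BOT_MARKERS`, `is_bot`, and `pick_page` are made-up names, and the marker list is an assumption you would swap for whatever your own filter matches.

```python
import random
from pathlib import Path

# Assumed user agent substrings, for illustration only.
# A real filter would reuse whatever list the webserver already matches on.
BOT_MARKERS = ("GPTBot", "CCBot")


def is_bot(user_agent: str) -> bool:
    """Return True if the user agent contains any known bot marker."""
    ua = user_agent.lower()
    return any(marker.lower() in ua for marker in BOT_MARKERS)


def pick_page(user_agent: str, real_page: Path, slop_dir: Path, rng=random) -> Path:
    """Pick a random .htm slop file for bots, the real page for everyone else."""
    if is_bot(user_agent):
        slop_files = sorted(slop_dir.glob("*.htm"))
        if slop_files:
            return rng.choice(slop_files)
    # Humans (and bots when no slop exists yet) get the real content.
    return real_page
```

Falling back to the real page when the slop directory is empty is a design choice for the sketch; dropping the connection as before would work just as well.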

I might just do this. It would be fun to write a quick Python script to automate this so that it keeps going forever. Just have a link that regenerates junk, then have it go to another junk HTML file, forevermore.
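A quick sketch of what that script might look like, assuming the pages are generated on request from the URL path rather than stored on disk. The word list, the `/junk/` URL prefix, and the `junk_page` name are all invented for the example.

```python
import hashlib
import random

# Placeholder vocabulary; swap in real slop if you have some lying around.
WORDS = ("synergy", "paradigm", "leverage", "holistic",
         "robust", "delve", "tapestry", "landscape")


def junk_page(path: str, n_words: int = 60, n_links: int = 3) -> str:
    """Build a junk HTML page whose content depends only on the request path."""
    # Seed the generator from the path so the same URL always returns the same page.
    seed = int.from_bytes(hashlib.sha256(path.encode()).digest()[:8], "big")
    rng = random.Random(seed)
    text = " ".join(rng.choice(WORDS) for _ in range(n_words))
    # Every page links to more junk URLs, so a crawler never runs out of pages.
    links = " ".join(
        f'<a href="/junk/{rng.getrandbits(64):016x}.htm">more</a>'
        for _ in range(n_links)
    )
    return f"<html><body><p>{text}</p><p>{links}</p></body></html>"
```

Since each page is derived from its own path, nothing has to be written to disk and the maze is effectively endless: `junk_page("/junk/start.htm")` gives a page with three links, each of which yields another page in the same way.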

Hahahahaha

AI doing the job of poisoning itself

They call this scenario the Habsburg Singularity

Imo this is not a bad thing.

All the big LLM players are staunchly against regulation; this is one of the outcomes of that. So, by all means, please continue building an ouroboros of nonsense. It’ll only make the regulations that eventually get applied to ML stricter and more incisive.

Maybe this will become a major driver for the improvement of AI watermarking and detection techniques. If AI companies want to continue sucking up the whole internet to train their models on, they’ll have to be able to filter out the AI-generated content.

“filter out” is an arms race, and watermarking has very real limitations when it comes to textual content.

I’m interested in this but not very familiar. Are the limitations to do with brittleness (not surviving minor edits) and the need for text to be long enough for statistical effects to become visible?

Yes. Also, non-native speakers of a language tend to follow word choice patterns similar to those of LLMs, which creates a whole set of false positives on detection.
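To put rough numbers on the length point, here is a sketch that assumes a "green list" style watermark, where the generator is nudged toward a secret subset of tokens and the detector counts how many tokens land in that subset. That scheme is an assumption for illustration, not something anyone above specified, and `watermark_z` and `gamma` are made-up names.

```python
import math


def watermark_z(green_hits: int, total_tokens: int, gamma: float = 0.5) -> float:
    """z-score of the green-token count against the no-watermark expectation.

    gamma is the assumed fraction of the vocabulary on the green list,
    so unwatermarked text should hit green about gamma of the time.
    """
    expected = gamma * total_tokens
    std = math.sqrt(total_tokens * gamma * (1 - gamma))
    return (green_hits - expected) / std


# Same 70% green rate, different lengths.
short_z = watermark_z(14, 20)    # about 1.8, hard to tell from chance
long_z = watermark_z(140, 200)   # about 5.7, a clear signal
```

The score grows with the square root of the token count, so short texts rarely clear a detection threshold. Brittleness falls out of the same formula: every token a paraphrase replaces lands on the green list only about `gamma` of the time, which pulls the count back toward chance.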

So now LLM makers actually have to sanitize their datasets? The horror…

I don’t think that’s tractable.

Anyone old enough to have played with a photocopier as a kid could have told you this was going to happen.

Blinks slowly

But, but, I have a photocopier now…

So then you know what happens when you make a copy of a copy of a copy and so on. Same thing with LLMs.
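A toy version of the photocopier effect, as a sketch: fit a normal distribution to some samples, draw new samples from the fit, refit, and repeat. This only stands in for "training on your own output" and is nothing like real LLM training; `simulate_copies` and its parameters are invented for the example.

```python
import random
import statistics


def simulate_copies(generations: int = 200, sample_size: int = 10, seed: int = 0):
    """Repeatedly refit a Gaussian to samples drawn from the previous fit.

    Returns the fitted standard deviation after each generation,
    starting with the original value of 1.0.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    history = [sigma]
    for _ in range(generations):
        # Each generation only ever sees the previous generation's output.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.mean(samples)
        sigma = statistics.pstdev(samples)  # maximum-likelihood fit
        history.append(sigma)
    return history


history = simulate_copies()
print(f"std at start: {history[0]:.3f}, after {len(history) - 1} copies: {history[-1]:.3g}")
```

With small samples, the fitted spread tends to shrink from one generation to the next, so the distribution gradually loses its tails, much like detail washing out of a copy of a copy.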